Reliability Starts with the Right Prompt Format

It’s not the model – it’s how you’re talking to it.

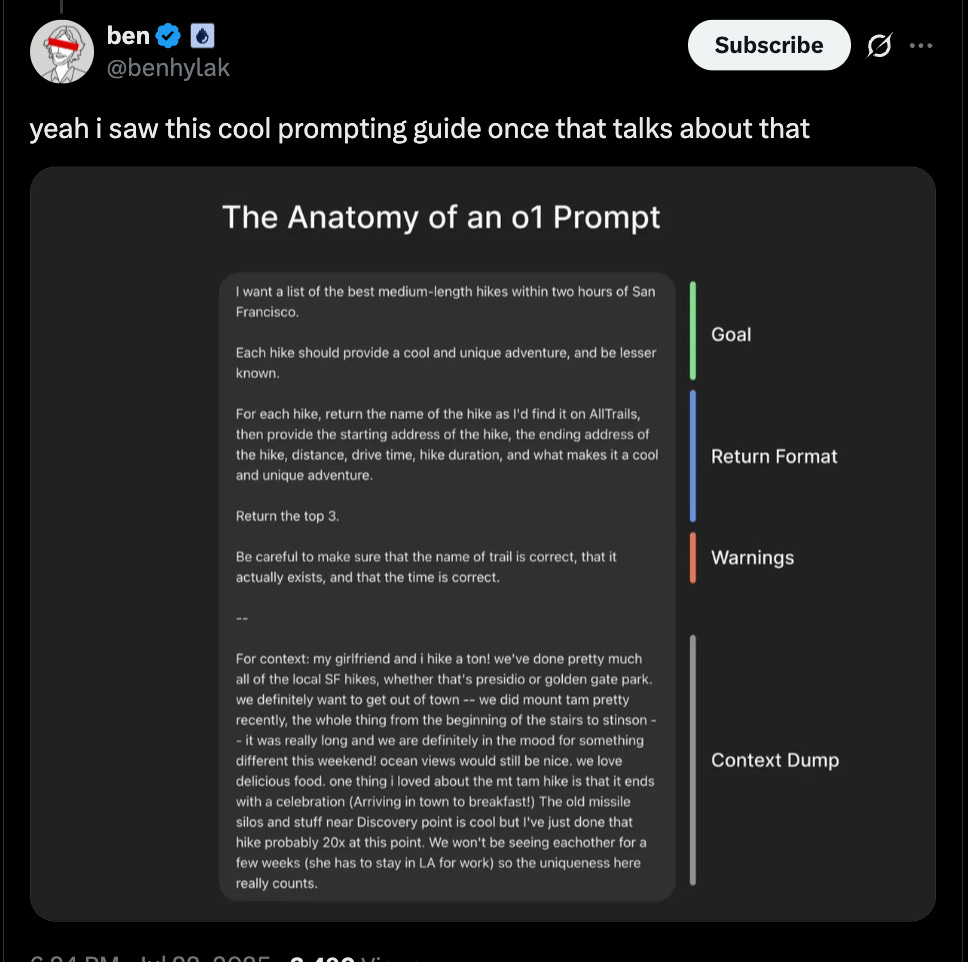

For a while, this tweet about the “Anatomy of an o1 Prompt” was my north star when it came to prompting.

It was simple, powerful, and worked well for natural-language tasks, especially search, summarization, and even creative writing.

It helped define the core components of a good prompt: goal, return format, context, and constraints.

But when I started working more deeply with Cursor for code generation and pair programming with AI, I realized something important:

The o1 prompt doesn’t work well for coding.

Why the o1 Prompt Fails in Cursor

Here’s why the o1 format - while great for general-purpose tasks - breaks down in code environments like Cursor:

❌ No role definition:

Without specifying the tech stack or developer level, the AI might guess wrong (e.g. suggest Flask when you’re using Next.js).

❌ No scope boundaries:

It might touch unrelated files, rename things you didn’t mean to change, or—worse—alter the DB schema.

❌ No memory leverage:

The model doesn't reference or use past architecture decisions, internal documentation, or established workflows.

❌ Wasted tokens on output formatting:

Cursor already gives you code diffs. You don’t need to ask for them explicitly.

And so, after hitting these limitations repeatedly, I started crafting my own system, which I now call:

The Anatomy of a Cursor Prompt

After building 30+ apps with Cursor, this is how I prompt now. It’s not a rulebook, it’s what actually works for me.

I broke it down into 7 essential parts, each acting like a guardrail to keep the AI laser-focused:

Role / Stack

“You are a senior Next.js developer.”

This sets the tone and context. The AI behaves like a dev familiar with your codebase and conventions.

Goal

“Add email–password auth using Supabase Auth.”

Clearly defined and scoped mission.

Scope & Constraints

“Only edit files in app/controllers and app/services. No DB schema changes.”

This prevents code leakage or touching unrelated logic.

Context

“@file app/controllers/signup_controller.rb @paste console_log.txt”

Gives the AI the current code and the error logs, so it's not guessing.

Plan

“Make a clear TODO list before you code. Include each major step you plan to take.”

Forces structured reasoning before it starts writing.

Memory Recall

“@memory-docs/architecture/authentication.md”

Pulls in your own system knowledge, past decisions, or architecture docs.

Verification

“Test this with a unit test.”

Ensures it works before you even review the code.
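The seven parts above can be put together mechanically. Here is a minimal Python sketch of that idea. The `CursorPrompt` class and its `render` method are hypothetical names made up for illustration (they are not part of Cursor), and the field values are simply the example lines from the list.

```python
from dataclasses import dataclass, field


@dataclass
class CursorPrompt:
    """Hypothetical container for the seven prompt parts (not a Cursor API)."""
    role: str
    goal: str
    scope: str
    context: list[str] = field(default_factory=list)
    plan: str = ""
    memory: list[str] = field(default_factory=list)
    verification: str = ""

    def render(self) -> str:
        """Join the non-empty parts into one prompt, one labelled paragraph each."""
        parts = [
            ("Role / Stack", self.role),
            ("Goal", self.goal),
            ("Scope & Constraints", self.scope),
            ("Context", " ".join(self.context)),
            ("Plan", self.plan),
            ("Memory Recall", " ".join(self.memory)),
            ("Verification", self.verification),
        ]
        # Skip any part left empty so short prompts stay short.
        return "\n\n".join(f"{label}: {text}" for label, text in parts if text)


prompt = CursorPrompt(
    role="You are a senior Next.js developer.",
    goal="Add email-password auth using Supabase Auth.",
    scope="Only edit files in app/controllers and app/services. No DB schema changes.",
    context=["@file app/controllers/signup_controller.rb", "@paste console_log.txt"],
    plan="Make a clear TODO list before you code. Include each major step you plan to take.",
    memory=["@memory-docs/architecture/authentication.md"],
    verification="Test this with a unit test.",
)
rendered = prompt.render()
print(rendered)
```

Keeping the parts as separate fields is one way to make a prompt reusable: swap the goal and context for the next task and leave the role, scope, and verification lines alone.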

Why it Matters

This structure gives the model the clarity it needs to produce focused, high-quality output.

It also makes your prompts versatile and reusable across different tasks.

More importantly, it makes them reliable.

If Cursor keeps editing the wrong files, changing things it shouldn’t, or misunderstanding what you meant…it’s not the model’s fault.

What you're missing is structure.

This format solves that by giving the model guardrails: clear goals, tight scope, and the right context.

So instead of guessing what you want, and how you want it, it executes with precision.

What I’ve Been Up To

On Friday, I thought deeply about a question that’s been bugging me:

“How do we give AI the same intuition people have after spending years in a role?”

That line of thinking led me to this insight:

Humans recall experience when needed. AI knows everything all at once.

We navigate the world by selectively remembering, while AI floods itself with all knowledge every time.

That gap inspired something new:

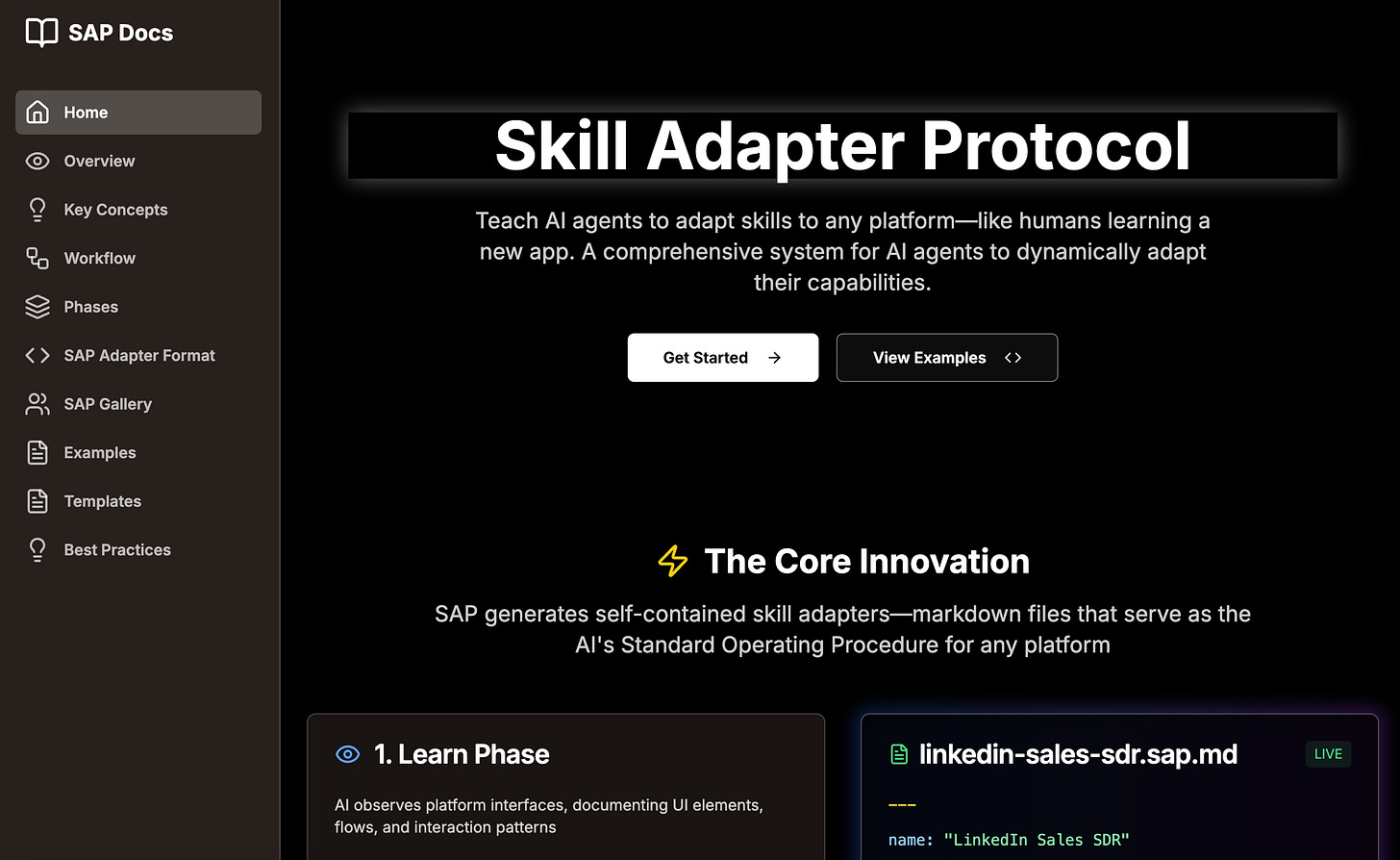

Introducing the Skill Adapter Protocol (SAP)

SAP is a new framework I’m building to let AI agents adapt to any tool or platform like a skilled employee would. Imagine an AI saying:

“I’m a product manager using Notion. Let me load the Notion-SAP.”

Boom. It instantly knows how to operate it like a pro even if the UI changed last week.

I’ve been sharing a direct application of this in a thread on X (Twitter): @a16sap

🧵 Full thread here

This is just the beginning, but for this to scale, I needed to fix one more thing.

The M×N Problem and the SAP Hub

There are so many roles and so many platforms to think about. I can’t cover them all alone.

On top of that, everyone might have their own definition of a role, and that’s a big problem:

The M×N problem:

M (roles) × N (platforms) = Too many combinations for hardcoding skills.
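To make the arithmetic concrete, here is a small Python sketch. The role and platform lists are made-up examples (only "product manager" and "Notion" appear in this post), not a real catalogue.

```python
from itertools import product

# Illustrative lists only; a real catalogue would be far longer.
roles = ["product manager", "designer", "support agent"]
platforms = ["Notion", "Figma", "Zendesk", "Cursor"]

# Every (role, platform) pair would need its own hand-written skill.
pairs = list(product(roles, platforms))
print(len(pairs))  # 3 roles x 4 platforms = 12

# Adding a single platform adds one new pair per existing role.
pairs_after = list(product(roles, platforms + ["Linear"]))
print(len(pairs_after) - len(pairs))  # 3
```

Even with tiny lists the count grows multiplicatively, which is why hardcoding each pair by hand stops being practical.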

To resolve this, I’m building the Skill Adapter Protocol Hub, where any agent can download the right protocol instantly.

It’ll be open so the community can feed new SAPs as tools evolve.

Right now I’m figuring out a way for agents to learn this on their own, but eventually agents won’t even need retraining.

There will be so many SAPs that training would be redundant.

They’ll just find the latest SAP, observe a new workflow, and regenerate their job playbook.
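As a rough illustration of "find the latest SAP", here is a Python sketch of a lookup keyed by role and platform. Everything in it is an assumption: `SkillAdapter`, `SapHub`, `publish`, and `latest` are hypothetical names, the hub is a plain in-memory dictionary, and the integer version field is invented for the example. The actual hub design is not specified here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SkillAdapter:
    """Hypothetical SAP record; the real format is not defined in this post."""
    role: str
    platform: str
    version: int
    playbook: str


class SapHub:
    """In-memory stand-in for the hub, for illustration only."""

    def __init__(self) -> None:
        self._adapters: dict[tuple[str, str], list[SkillAdapter]] = {}

    def publish(self, adapter: SkillAdapter) -> None:
        """Store an adapter under its (role, platform) key."""
        key = (adapter.role, adapter.platform)
        self._adapters.setdefault(key, []).append(adapter)

    def latest(self, role: str, platform: str) -> Optional[SkillAdapter]:
        """Return the highest-version adapter for the pair, or None if absent."""
        candidates = self._adapters.get((role, platform), [])
        return max(candidates, key=lambda a: a.version, default=None)


hub = SapHub()
hub.publish(SkillAdapter("product manager", "Notion", 1, "old workflow notes"))
hub.publish(SkillAdapter("product manager", "Notion", 2, "updated workflow notes"))

adapter = hub.latest("product manager", "Notion")
print(adapter.version)  # 2
print(hub.latest("designer", "Figma"))  # None
```

The point of the sketch is only that an agent asks for a (role, platform) pair and gets back whatever was published most recently, instead of carrying every combination around itself.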

I genuinely believe there’s something new here.

This is a protocol that shifts how AI agents learn and operate.

It’s the kind of thing that feels obvious in hindsight, but no one’s built it yet.

It reminds me of how the Model Context Protocol (MCP) showed up quietly before becoming a turning point.

I think the Skill Adapter Protocol (SAP) could be one of those moments too.

Back in 1950, Alan Turing - one of the founding minds of modern computing - asked a bold question:

“Can machines think?”

To explore it, he proposed something now known as the Turing Test. Here’s how it works:

A human talks to two unseen entities: one is another human, the other is a machine.

If the human can’t reliably tell which is which, the machine is said to have passed the test.

It wasn’t about consciousness or emotions. It was about conversation and deception. Could a machine imitate a human well enough to fool us?

We’ve blown past the Turing Test. And we did it quietly.

Just open ChatGPT, Claude, Gemini, or Perplexity today and start a chat.

Ask about philosophy, relationships, productivity hacks, or how to debug a React app, and the AI responds just like a person would (often better).

In fact, AI models regularly fool people into thinking they’re human on forums, in support chats, and in DMs.

SAP has allowed me to work with an AI agent the way I always wished AI would work.

I’ve never written a research paper before.

But I’m writing this one - because sometimes when you’re the first to see something, your job isn’t to wait for recognition.

It’s to make the case clear and loud, and let the work lead the way.

And when you know you’re onto something important - and nobody sees it yet - you make your own visibility.

Wishing you a wonderful weekend.

Learn it to make it.

Cheers

Richardson